Submitting feedback

When monitoring your production generative data with our SDK, you may need to capture feedback about the quality of your generative output.

Gentrace supports this by combining the techniques of production evaluation and API / SDK evaluation.

Using these techniques, you can set up several different feedback mechanisms and run them simultaneously.

Gentrace's browser SDK (@gentrace/browser) and the in-app default feedback evaluation method are deprecated and no longer recommended. For greater flexibility and control, we recommend the workflow below.

Create a placeholder evaluator

First, create the evaluator that will receive your feedback.

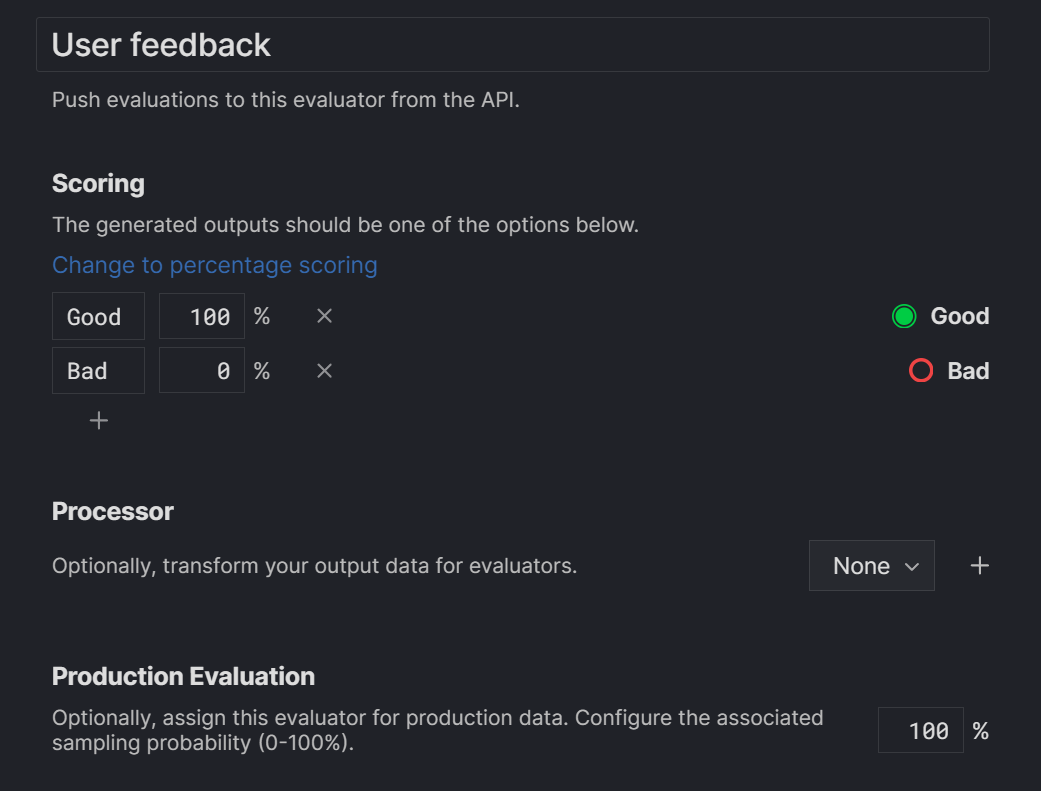

The evaluator should use the API evaluator template located under "Human / External" on the new evaluator page.

Ensure the production evaluation sampling probability is set to 100% so that it will accept feedback on any run.

As an example, consider an evaluator that receives a thumbs up / thumbs down rating. This evaluator, like any other API evaluator, can optionally receive a note along with the grade.

Submit grades (and notes)

Now, you can submit feedback to the new feedback evaluator using our create evaluation API.

Ensure you have the IDs of both the run and the evaluator for which you intend to submit feedback.

Then, submit feedback using the SDK or API methods.

Example:
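A minimal Python sketch of this step, using only the standard library rather than the Gentrace SDK. The field names (`runId`, `evaluatorId`, `evalLabel`, `note`), the label values, the bearer-token header, and the `endpoint_url` parameter are all assumptions made for illustration; consult the create evaluation API reference for the actual request shape before relying on any of them.

```python
import json
import urllib.request
from typing import Optional


def build_feedback_payload(
    run_id: str,
    evaluator_id: str,
    thumbs_up: bool,
    note: Optional[str] = None,
) -> dict:
    """Build a thumbs up / thumbs down grade for one run.

    All field names and label values here are hypothetical.
    """
    if not run_id or not evaluator_id:
        raise ValueError("both run_id and evaluator_id are required")
    payload = {
        "runId": run_id,
        "evaluatorId": evaluator_id,
        "evalLabel": "thumbs-up" if thumbs_up else "thumbs-down",
    }
    # The note is optional, so include it only when one was provided.
    if note:
        payload["note"] = note
    return payload


def build_request(endpoint_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in a JSON POST request.

    endpoint_url is supplied by the caller; the bearer-token
    Authorization header is an assumption.
    """
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def submit_feedback(endpoint_url: str, api_key: str, payload: dict, timeout: float = 10.0) -> int:
    """Send the feedback and return the HTTP status code."""
    request = build_request(endpoint_url, api_key, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

To use it, call `build_feedback_payload` with the run ID, the evaluator ID, and the user's rating, then pass the result to `submit_feedback` together with your endpoint URL and API key. Keeping payload construction separate from the network call makes the grade logic easy to test without sending anything.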


More details

For detailed instructions on setting up API evaluators, refer to our external evaluator guide.

For more information on evaluating in production, refer to our Production evaluation documentation.